Created 3 years ago

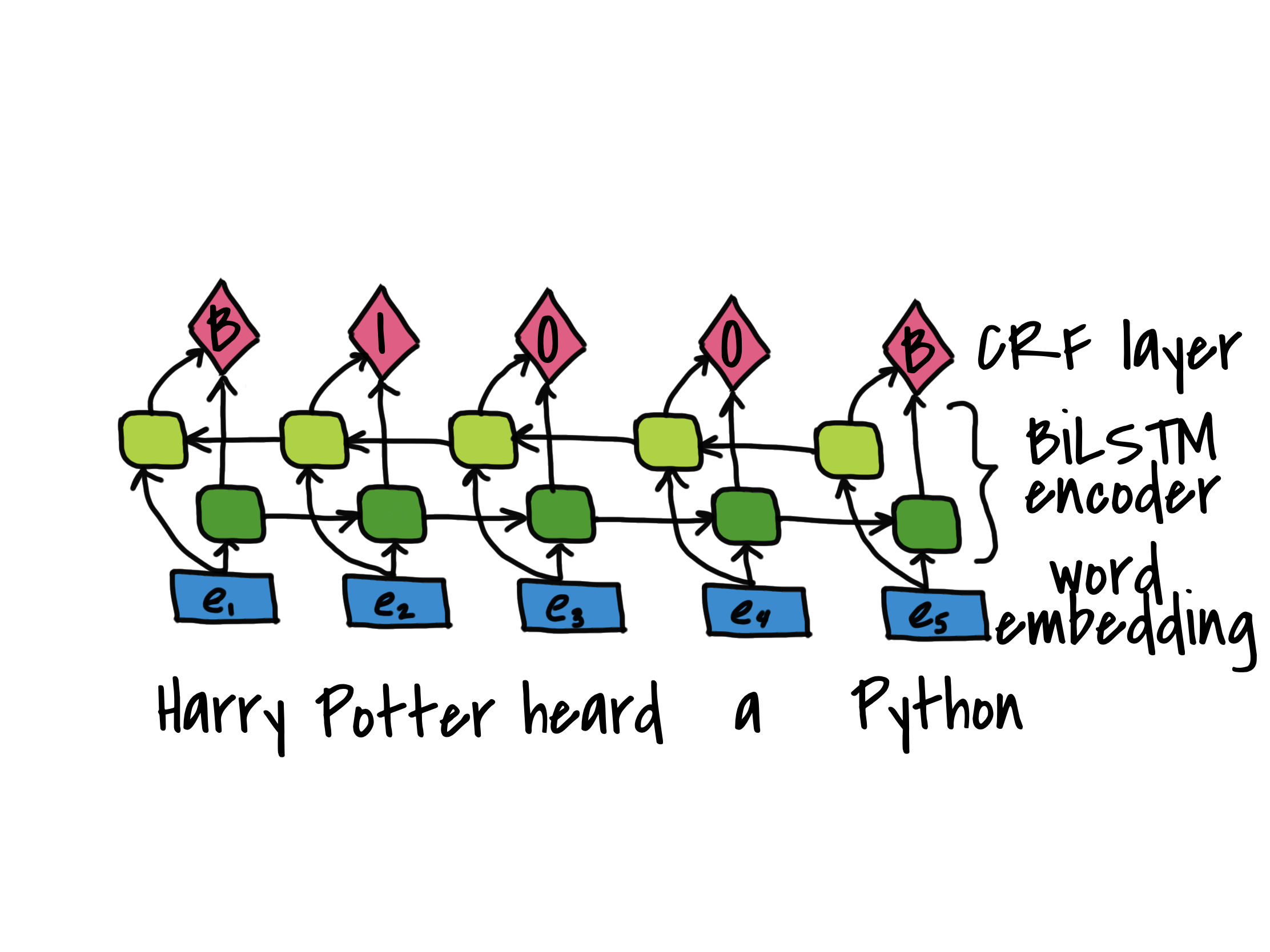

Advanced Tutorial: Named Entity Recognition using a Bi-LSTM with the Conditional Random Field Algorithm

Tutorial Link: https://pytorch.org/tutorials/beginner/nlp/advanced_tutorial.html

The Bi-LSTM uses both past and future context from the input sequence. Its inputs are word embeddings, that is, vectors representing the input words.

Outline

- Definitions
  - Bi-LSTM
  - CRF and potentials
  - Viterbi
- Helper Functions
- Data
- Create the Network
- Train
- Evaluate

Definitions

Bi-LSTM (Bidirectional Long Short-Term Memory)

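As we saw, an LSTM addresses the vanishing gradient problem of the vanilla RNN by adding a cell state and gates (sigmoid-activated layers) that pass on or attenuate signals to varying degrees. However, the main limitation of a unidirectional LSTM is that it can only account for context from the past: the hidden state h_t is computed only from the inputs up to and including x_t, so it cannot use words that appear later in the sentence. A Bi-LSTM removes this limitation by running a second LSTM over the sequence in reverse and combining the two hidden states at each position.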
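To make the past-only limitation concrete, here is a minimal, stdlib-only sketch. It is not the tutorial's code: it assumes a toy LSTM cell with scalar input, scalar hidden/cell state and random weights, and the names `ScalarLSTMCell`, `run` and `bi_lstm` are hypothetical. Two sequences that share a prefix but differ in their last token give the same forward hidden state at the first position, while the bidirectional output at that position differs because the backward pass has already seen the last token.

```python
import math
import random
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class ScalarLSTMCell:
    """Hypothetical toy LSTM cell with scalar input and scalar hidden/cell state."""

    def __init__(self, seed: int) -> None:
        rng = random.Random(seed)
        # One (input weight, hidden weight, bias) triple per gate:
        # i = input gate, f = forget gate, g = candidate values, o = output gate.
        self.params = {
            gate: (rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, 1))
            for gate in ("i", "f", "g", "o")
        }

    def _pre(self, gate: str, x: float, h: float) -> float:
        w_x, w_h, b = self.params[gate]
        return w_x * x + w_h * h + b

    def step(self, x: float, h: float, c: float) -> Tuple[float, float]:
        i = sigmoid(self._pre("i", x, h))    # how much new information to write
        f = sigmoid(self._pre("f", x, h))    # how much of the old cell state to keep
        g = math.tanh(self._pre("g", x, h))  # candidate values to write
        o = sigmoid(self._pre("o", x, h))    # how much of the cell state to expose
        c = f * c + i * g                    # additive cell-state update
        h = o * math.tanh(c)
        return h, c


def run(cell: ScalarLSTMCell, xs: List[float]) -> List[float]:
    """Run the cell left to right and return the hidden state at every step."""
    h, c, hs = 0.0, 0.0, []
    for x in xs:
        h, c = cell.step(x, h, c)
        hs.append(h)
    return hs


def bi_lstm(fwd: ScalarLSTMCell, bwd: ScalarLSTMCell,
            xs: List[float]) -> List[Tuple[float, float]]:
    """Pair each forward state with the backward state at the same position."""
    forward = run(fwd, xs)
    backward = run(bwd, xs[::-1])[::-1]  # run in reverse, then re-align positions
    return list(zip(forward, backward))


fwd, bwd = ScalarLSTMCell(seed=0), ScalarLSTMCell(seed=1)
seq_a = [0.5, -1.0, 2.0]
seq_b = [0.5, -1.0, -3.0]  # same prefix, different last token

# Forward-only LSTM: the first hidden state has not seen the last token yet.
print(run(fwd, seq_a)[0] == run(fwd, seq_b)[0])
# Bi-LSTM: the first position also reflects the (different) last token.
print(bi_lstm(fwd, bwd, seq_a)[0] == bi_lstm(fwd, bwd, seq_b)[0])
```

In PyTorch, the same idea is available through `nn.LSTM` with `bidirectional=True`, which returns, at each time step, the forward and backward hidden states concatenated along the feature dimension.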