Created 4 years ago

Training Deep Neural Networks on a GPU with PyTorch

Part 4 of "PyTorch: Zero to GANs"

This post is the fourth in a series of tutorials on building deep learning models with PyTorch, an open-source neural network library. Check out the full series:

- PyTorch Basics: Tensors & Gradients

- Linear Regression & Gradient Descent

- Image Classification using Logistic Regression

- Training Deep Neural Networks on a GPU

- Image Classification using Convolutional Neural Networks

- Data Augmentation, Regularization and ResNets

- Generating Images using Generative Adversarial Networks

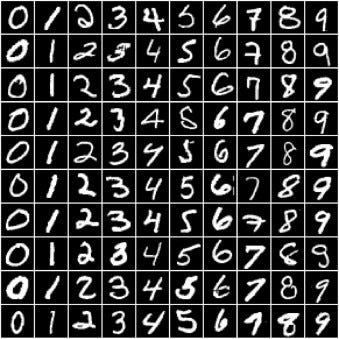

In the previous tutorial, we trained a logistic regression model to identify handwritten digits from the MNIST dataset with an accuracy of around 86%.

However, we also noticed that it's quite difficult to improve the accuracy beyond 87%, because a logistic regression model can only capture linear relationships between the input pixels and the output class scores. In this post, we'll try to improve upon it using a feedforward neural network.

Preparing the Data

The data preparation is identical to the previous tutorial. We begin by importing the required modules & classes.

    import torch
    import numpy as np
    import torchvision
    from torchvision.datasets import MNIST
    from torchvision.transforms import ToTensor
    from torch.utils.data.sampler import SubsetRandomSampler
    from torch.utils.data.dataloader import DataLoader

    dataset = MNIST(root='data/',
                    download=True,
                    transform=ToTensor())

    def split_indices(n, val_pct):
        # Determine size of validation set
        n_val = int(val_pct*n)
        # Create random permutation of 0 to n-1
        idxs = np.random.permutation(n)
        # Pick first n_val indices for validation set
        # (returned as: training indices, validation indices)
        return idxs[n_val:], idxs[:n_val]
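To see what `split_indices` returns, here is a minimal sketch that calls it on a toy range of 10 indices instead of the full dataset. The helper is repeated so the snippet runs on its own. The seed, the toy size `n=10`, and the check variables `no_overlap` and `covers_all` are assumptions made for illustration, not part of the tutorial.

```python
import numpy as np

def split_indices(n, val_pct):
    # Same helper as in the tutorial, repeated to keep this snippet self-contained
    n_val = int(val_pct * n)
    idxs = np.random.permutation(n)
    return idxs[n_val:], idxs[:n_val]

# Illustrative seed (an assumption) so repeated runs give the same split
np.random.seed(0)

# Split 10 indices, holding out 20% for validation
train_idxs, val_idxs = split_indices(10, val_pct=0.2)

# 8 indices go to training and 2 to validation
print(len(train_idxs), len(val_idxs))  # prints: 8 2

# The two sets never share an index, and together they cover 0..9
no_overlap = set(train_idxs).isdisjoint(val_idxs)
covers_all = sorted(np.concatenate([train_idxs, val_idxs]).tolist()) == list(range(10))
print(no_overlap, covers_all)  # prints: True True
```

Because the function returns the training indices first and the validation indices second, the two arrays can each be passed to a `SubsetRandomSampler` to draw batches from disjoint parts of the same dataset.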